In today’s rapid development cycle, deploying, scaling, and managing applications efficiently is crucial. Docker addresses this by packaging applications into isolated, lightweight “containers.” This powerful platform ensures your application “always runs the same, regardless of the infrastructure,” eliminating the infamous “it works on my machine” problem.

Why Docker is So Flexible

Docker’s flexibility comes from key features:

Portability: Build an image once, run it anywhere Docker is installed (local, testing, cloud).

Isolation & Security: Containers run in loosely isolated environments, preventing interference and enhancing security. You can run many containers on a single host without conflicts.

Efficiency: Containers are lightweight, sharing the host OS kernel, leading to faster startups and lower resource consumption compared to VMs.

Scalability: Easily spin up multiple application instances by launching more containers, perfect for microservices.

Version Control for Environments: Dockerfiles act as blueprints, allowing you to version control your application’s environment.

Getting Started Fast: Docker Pull for PostgreSQL

The quickest way to experience Docker is by pulling a pre-built image from Docker Hub. For PostgreSQL, it’s incredibly simple:

- Pull the image:

docker pull postgres:13-alpine

- Run the container:

docker run -p 5432:5432 --name my-postgres-db -e POSTGRES_PASSWORD=your_strong_password -d postgres:13-alpine

This command starts a PostgreSQL 13 container (downloading the image first if it is not already present), maps your computer’s port 5432 to the container’s 5432, names the container, sets the superuser password, and runs it in the background.
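PostgreSQL needs a moment to initialize after docker run returns. The following is a minimal sketch of a readiness check, assuming the container name my-postgres-db from the command above and the image's default postgres superuser (no POSTGRES_USER was set). The function name wait_for_postgres and the 30-try default are illustrative choices, not part of Docker.

```shell
# Poll pg_isready (shipped in the official postgres image) until the server
# accepts connections, or give up after a number of one-second tries.
wait_for_postgres() {
  container="${1:-my-postgres-db}"   # container name from the docker run example
  tries="${2:-30}"                   # assumed retry limit; adjust as needed
  i=0
  while [ "$i" -lt "$tries" ]; do
    if docker exec "$container" pg_isready -U postgres >/dev/null 2>&1; then
      echo "PostgreSQL is ready"
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "PostgreSQL did not become ready in time" >&2
  return 1
}

# Usage (requires the container started above):
#   wait_for_postgres my-postgres-db && \
#     docker exec -it my-postgres-db psql -U postgres -c 'SELECT version();'
```

Running psql through docker exec avoids installing a PostgreSQL client on the host.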

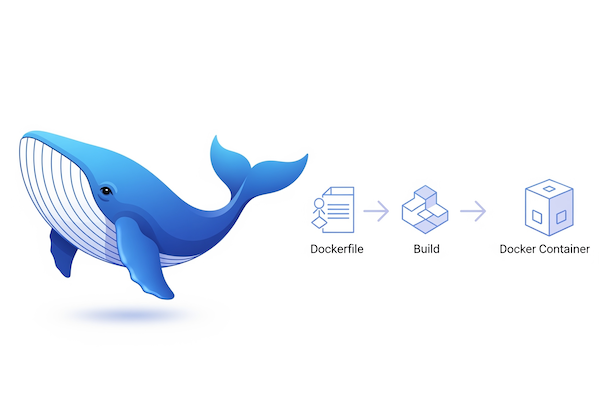

Demystifying the Dockerfile: Building Your Custom Container

For more control, a Dockerfile is your recipe for building custom Docker images. It’s a text file with instructions for Docker to follow.

Here’s a basic Dockerfile for PostgreSQL:

# Use an official PostgreSQL base image

FROM postgres:13-alpine

# Set environment variables for PostgreSQL

ENV POSTGRES_DB=mydatabase

ENV POSTGRES_USER=myuser

ENV POSTGRES_PASSWORD=mypassword

# Expose the default PostgreSQL port (5432)

EXPOSE 5432

# Optional: Copy custom SQL scripts to run on first startup

# COPY init.sql /docker-entrypoint-initdb.d/

Key Instructions Explained:

- FROM postgres:13-alpine: Specifies the base image. postgres is the official image, 13-alpine indicates version 13 on a lightweight Alpine Linux distribution, which results in smaller images and faster downloads.

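- ENV POSTGRES_DB=mydatabase: Sets environment variables for PostgreSQL configuration during initial setup (database name, user, password). Values set with ENV are stored in the image itself, so use placeholder passwords here and never real credentials.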

- EXPOSE 5432: Informs Docker the container listens on port 5432. This is documentation; you still need to publish the port when running the container (as shown in the docker run example).

- COPY init.sql /docker-entrypoint-initdb.d/: (Commented out) If uncommented, this would copy an init.sql file from your local machine into the image, allowing PostgreSQL to execute it on first startup for schema creation or data loading.
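To try the Dockerfile above, you could create a small init.sql, uncomment the COPY line, then build and run the image. This is a sketch under assumptions: the notes table, the image name my-postgres-image, and the container name my-custom-postgres are all hypothetical and only serve as an illustration.

```shell
# Hypothetical init script; the table name and columns are illustrative only.
cat > init.sql <<'SQL'
CREATE TABLE IF NOT EXISTS notes (
    id SERIAL PRIMARY KEY,
    body TEXT NOT NULL
);
SQL

# Build the image from the Dockerfile in the current directory, then start
# a container from it. The password comes from the ENV line in the Dockerfile.
build_and_run() {
  docker build -t my-postgres-image . &&
  docker run -d --name my-custom-postgres -p 5432:5432 my-postgres-image
}

# Usage (with the COPY line in the Dockerfile uncommented):
#   build_and_run
```

Because the init script runs only on first startup, changes to init.sql take effect only for a container that starts with an empty data directory.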

Orchestrating with Docker Compose: Multi-Container Magic

While a Dockerfile describes a single image, real-world applications often involve multiple services (e.g., a web app, a database, a cache). Docker Compose simplifies managing these multi-container applications using a single docker-compose.yml file.

This approach defines all your services, networks, and volumes in one place, allowing you to bring up your entire application stack with a single command. It’s ideal for complex setups requiring persistent volumes, secrets, and custom command overrides.

Example docker-compose.yml for a robust PostgreSQL setup:

# docker-compose.yml
services:
  postgres:
    image: postgres:17.4
    container_name: postgres
    hostname: postgres
    environment:
      POSTGRES_USER_FILE: /run/secrets/pg_user
      POSTGRES_DB: postgres
      POSTGRES_PASSWORD_FILE: /run/secrets/pg_pw
      TZ: America/Chicago
      PGTZ: America/Chicago
    secrets:
      - pg_user
      - pg_pw
    volumes:
      - ./data:/var/lib/postgresql/data # Persistent data storage
      - ./logs:/var/log/postgresql # PostgreSQL logs
      - ./certs:/var/lib/postgresql/certs # SSL certificates
    command: > # Custom PostgreSQL startup command
      postgres -c ssl=on
      -c ssl_cert_file=/var/lib/postgresql/certs/server.crt
      -c ssl_key_file=/var/lib/postgresql/certs/server.key
      -c logging_collector=on
      -c log_directory=/var/log/postgresql
    # ... (other logging and performance settings)
    restart: unless-stopped
    network_mode: "host" # Accessible via localhost; for production, map specific ports
secrets:
  pg_user:
    file: ./secrets/pg_user.txt
  pg_pw:
    file: ./secrets/pg_pw.txt

Steps to use Docker Compose:

- Install Docker Desktop: It includes Docker Compose.

- Create project structure:

mkdir my-postgres-app && cd my-postgres-app

mkdir data logs certs secrets

- Create secret files: (Remember, don’t commit these to public repos!)

echo "your_pg_username" > secrets/pg_user.txt

echo "your_strong_password" > secrets/pg_pw.txt

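- Generate SSL Certificates: (For local SSL, in my-postgres-app dir)

openssl genrsa -out certs/server.key 2048

openssl req -new -key certs/server.key -out certs/server.csr -subj "/CN=localhost"

openssl x509 -req -days 365 -in certs/server.csr -signkey certs/server.key -out certs/server.crt

PostgreSQL refuses to load a private key that is readable by group or others, or that is not owned by the database user or root. If the container exits with a key-permission error, run chmod 600 certs/server.key and, on Linux hosts, change the file’s owner to the UID of the postgres user inside the container (typically 999 in the Debian-based official image; you can check with docker run --rm postgres:17.4 id postgres).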

- Create docker-compose.yml: Paste the YAML content above into this file.

- Start the service:

docker compose up -d

- Verify:

docker ps # Should show 'postgres' container running

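- Connect: Your PostgreSQL database will be accessible on localhost:5432 with the user/password from your secret files and SSL enabled. Host networking works this way on Linux; on Docker Desktop for Mac or Windows it may need to be enabled in the settings, or you can replace network_mode with a ports mapping such as "5432:5432".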
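Once the stack is up, a quick way to confirm the setup is to run a query inside the service. This is a minimal sketch, assuming the postgres service name and the secrets/pg_user.txt file from the steps above. The helper name compose_psql is hypothetical.

```shell
# Run a single SQL statement inside the 'postgres' service from the compose file.
# Reads the username from the secret file created in the steps above.
compose_psql() {
  user="$(cat secrets/pg_user.txt)"
  docker compose exec postgres psql -U "$user" -d postgres -c "$1"
}

# Usage (run from the my-postgres-app directory):
#   compose_psql 'SHOW ssl;'          # reports whether SSL is enabled on the server
#   docker compose logs -f postgres   # follow the service logs
#   docker compose down               # stop and remove the container
```

The data, logs, and certs directories are bind-mounted from the host, so docker compose down leaves them in place.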

Bonus: Docker Resources

Installation

- Docker Desktop is available for Mac, Linux and Windows: https://docs.docker.com/desktop

- View example projects that use Docker: https://github.com/docker/awesome-compose

- Check out docs for information on using Docker: https://docs.docker.com

General Docker Commands

- Start the Docker daemon manually (Docker Desktop typically starts it automatically):

dockerd

- Get help with Docker. You can also use --help on all subcommands:

docker --help

- Display system-wide information:

docker info

Images

A Docker image is a lightweight, standalone, executable package of software that includes everything needed to run an application: code, runtime, system tools, system libraries, and settings.

- Build an image from a Dockerfile in the current directory:

docker build -t <image_name> .

- Build an image from a Dockerfile without the cache:

docker build -t <image_name> . --no-cache

- List local images:

docker images

- Delete an image:

docker rmi <image_name>

- Remove all unused images:

docker image prune

- Publish an image to Docker Hub:

docker push <username>/<image_name>

- Pull an image from Docker Hub:

docker pull <image_name>

- Search Docker Hub for an image:

docker search <image_name>

Containers

A container is a runtime instance of a Docker image. A container will always run the same, regardless of the infrastructure. Containers isolate software from its environment and ensure that it works uniformly despite differences, for instance, between development and staging.

- Create and run a container from an image, with a custom name:

docker run --name <container_name> <image_name>

- Run a container and publish its port(s) to the host (for our PostgreSQL example):

docker run -p 5432:5432 --name my-postgres-db -e POSTGRES_PASSWORD=your_strong_password -d postgres:13-alpine

(This maps host port 5432 to container port 5432, names the container my-postgres-db, sets the superuser password the image requires on first startup, and runs it in the background.)

- Run a container in the background:

docker run -d <image_name>

- Start or stop an existing container (by name or ID):

docker start|stop <container_name>

- Remove a stopped container:

docker rm <container_name>

- Open a shell inside a running container:

docker exec -it <container_name> sh

- Fetch and follow the logs of a container:

docker logs -f <container_name>

- Inspect a container (by name or ID):

docker inspect <container_name>

- List currently running containers:

docker ps

- List all Docker containers (running and stopped):

docker ps --all

- View resource usage stats:

docker container stats
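Several of the commands above are often chained when iterating on a container. The following sketch combines them into one helper that removes any existing container with a given name and starts a fresh one. The function name recreate_container and its argument order are assumptions made for illustration.

```shell
# Remove a container if it exists (running or stopped), then start a fresh one.
# Arguments: container name, image, then any extra 'docker run' options.
recreate_container() {
  name="$1"
  image="$2"
  shift 2
  # 'docker container inspect' exits non-zero when no such container exists.
  if docker container inspect "$name" >/dev/null 2>&1; then
    docker rm -f "$name" >/dev/null
  fi
  docker run -d --name "$name" "$@" "$image"
}

# Usage:
#   recreate_container my-postgres-db postgres:13-alpine \
#     -p 5432:5432 -e POSTGRES_PASSWORD=your_strong_password
```

Note that docker rm -f stops a running container before removing it, so any data not stored in a volume is lost.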

Docker Hub

Docker Hub is a service provided by Docker for finding and sharing container images with your team. Learn more and find images at https://hub.docker.com

- Log in to Docker Hub:

docker login -u <username>

Docker Hub allows you to:

- Discover Official Images: Find well-maintained images from vendors and communities (like postgres).

- Pull Community Images: Download images built by other developers.

- Share Your Own Images: Publish your containerized applications for others to use.

This platform significantly accelerates development and deployment by providing a vast repository of pre-built solutions.
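Publishing your own image usually means tagging it under your Docker Hub namespace first and then pushing it. Here is a minimal sketch, assuming you are already logged in. The helper name publish_image and the image name in the usage line are hypothetical.

```shell
# Tag a local image under a Docker Hub namespace and push it.
# Arguments: local image name, Docker Hub username, tag (defaults to 'latest').
publish_image() {
  local_image="$1"
  username="$2"
  tag="${3:-latest}"
  target="$username/$local_image:$tag"
  docker tag "$local_image" "$target" && docker push "$target"
}

# Usage (after 'docker login -u <username>'):
#   publish_image my-postgres-image <username> 1.0
```

An explicit version tag, rather than latest, makes it easier for others to pin the image they pull.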

Conclusion

Docker has fundamentally changed application development and deployment. Its isolated, portable, and lightweight containers offer unmatched flexibility and consistency. By leveraging Dockerfiles for custom builds, utilizing Docker Hub’s vast resources, and mastering Docker Compose for multi-service applications, you’re well-equipped to streamline your development workflow and deploy applications with unprecedented efficiency.